Over the past two years, companies have invested heavily in automation, AI tooling, OCR, and workflow engines. On paper, these investments promised lower OPEX, fewer manual hours, less operational dependency, and cleaner data.

But privately, more CFOs are asking the same question:

“If we automated so much, why are our operational costs not going down?”

The truth is straightforward:

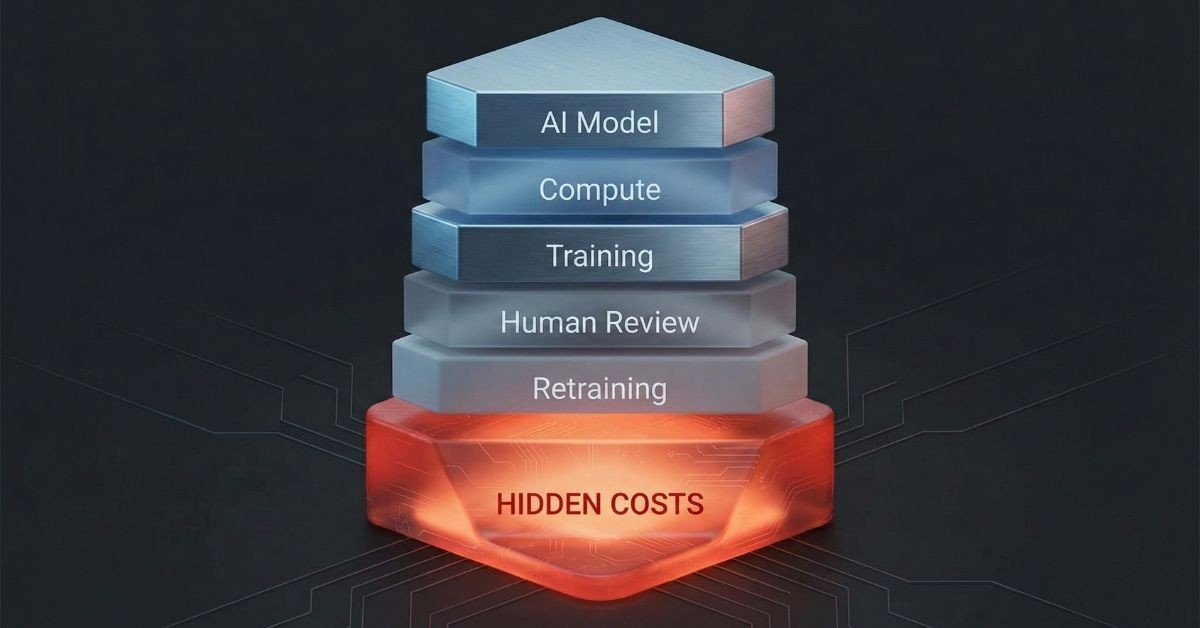

AI reduces visible workload, but it increases hidden workload.

This hidden layer is what we call Operational AI Debt, the silent, compounding cost created when AI outputs require human correction, validation, and exception handling.

In most enterprises, this debt isn’t tracked, budgeted, or even acknowledged. Yet it directly affects SLAs, payroll hours, customer experience, compliance exposure, and OPEX.

This article breaks down the five biggest hidden AI costs CFOs miss and how to fix them with a Hybrid Human-in-the-Loop (HITL) approach.

AI has a predictable failure pattern:

It works well with clean, standardized data and breaks down when it encounters anything outside that standard.

Here are the cost leaks that most CFOs never see in financial reporting:

AI is fast, but not always accurate. Every misread field, misclassified document, or incorrect routing creates a rework cycle.

Example from a real client scenario:

A FinTech company automated KYC verification. The AI handled clean passports and license scans well, but struggled with:

Internal staff ended up manually validating 24–28% of cases, spending more time on verification than before automation.

Rework isn’t recorded as “AI cost.” It simply appears as overtime, backlog, or delays.

But financially, it is true operational leakage.

Most AI engines run on decision thresholds. Anything below a confidence score gets pushed into a manual review queue.

CFOs never see that queue.

But operations teams do, every day.
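A minimal Python sketch of the threshold routing described above, assuming each extracted field carries a single model-reported confidence score. The `Extraction` record, the `route` function, and the 0.85 cutoff are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Extraction:
    doc_id: str
    field: str
    value: str
    confidence: float  # model-reported score in [0, 1] (assumed)


def route(extractions, threshold=0.85):
    """Split extractions into auto-accepted and manual-review lists by confidence."""
    auto, review = [], []
    for ex in extractions:
        # Anything below the (hypothetical) threshold goes to the human queue.
        (auto if ex.confidence >= threshold else review).append(ex)
    return auto, review


batch = [
    Extraction("doc-1", "passport_no", "X1234567", 0.97),
    Extraction("doc-2", "passport_no", "X76S4321", 0.62),
    Extraction("doc-3", "expiry_date", "2031-04-09", 0.88),
]
auto, review = route(batch)
print(len(auto), len(review))
```

Everything that lands in `review` is the queue operations teams work through; counting it per day is one simple way to make that workload visible.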

Real example:

A logistics brand automated freight documentation.

During peak weeks, 12–15% of BOL documents fell into exceptions because:

Each exception took 3–7 minutes to resolve.

Multiply that by tens of thousands of shipments, and the “invisible” cost is huge.
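The arithmetic behind that claim can be sketched as follows. The 12–15% exception rate and the 3–7 minute handling time come from the example; the 20,000 shipments per peak week is a hypothetical volume, so substitute your own.

```python
def exception_hours(volume, exception_rate, minutes_per_exception):
    """Return the human hours spent resolving exceptions for a given volume."""
    return volume * exception_rate * minutes_per_exception / 60


# Hypothetical peak-week volume; the rate and minute ranges come from the example.
VOLUME = 20_000
low = exception_hours(VOLUME, 0.12, 3)   # best case: 12% exceptions, 3 min each
high = exception_hours(VOLUME, 0.15, 7)  # worst case: 15% exceptions, 7 min each
print(f"{low:.0f}-{high:.0f} hours per peak week")
```

With these assumptions the range works out to roughly 120 to 350 staff hours in a single peak week.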

AI ingestion errors compound over time. A tiny mistake upstream becomes a significant distortion downstream.
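One simplified way to see the compounding, assuming each pipeline stage independently corrupts the same small fraction of records and nothing downstream catches it (real pipelines rarely behave this cleanly): the share of records carrying at least one error is `1 - (1 - e) ** n` for a per-stage error rate `e` across `n` stages. The function name is hypothetical.

```python
def share_with_errors(per_stage_error_rate, stages):
    """Fraction of records hit by at least one error across independent pipeline stages."""
    clean = (1 - per_stage_error_rate) ** stages  # probability a record survives every stage
    return 1 - clean


for stages in (1, 3, 5, 10):
    print(stages, f"{share_with_errors(0.01, stages):.1%}")
```

At a 1% per-stage error rate, five stages already leave about 4.9% of records touched by at least one error.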

Example:

A SaaS analytics client discovered that a 1% timestamp mismatch in event logs led to:

This “data drift” isn’t visible on a CFO dashboard, but it directly impacts decision quality.

Bad data = bad forecasting = financial losses.

AI is not reliable with edge cases or high-risk data.

A single misread field can violate GDPR, HIPAA, or financial reporting rules.

Example:

An EU insurer had its AI misread date formats (DD/MM vs MM/DD).

This created:

Compliance mistakes eat budgets faster than any operational error.
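A common guard against the DD/MM vs MM/DD problem is to flag any date that parses validly both ways with different results, rather than letting the model pick one. The sketch below assumes slash-separated dates with four-digit years; the function names are hypothetical.

```python
from datetime import date


def parse_day_first(text):
    d, m, y = (int(p) for p in text.split("/"))
    return date(y, m, d)


def parse_month_first(text):
    m, d, y = (int(p) for p in text.split("/"))
    return date(y, m, d)


def needs_review(text):
    """True when a date is ambiguous (two different valid readings) or unparseable."""
    readings = set()
    for parser in (parse_day_first, parse_month_first):
        try:
            readings.add(parser(text))
        except ValueError:
            # Invalid day/month or malformed string: this reading is impossible.
            pass
    return len(readings) != 1


for raw in ("03/04/2024", "25/04/2024", "05/05/2024"):
    print(raw, "review" if needs_review(raw) else "auto")
```

Dates such as 25/04/2024 can only be read one way and pass through; 03/04/2024 goes to a human reviewer, as does anything that cannot be parsed at all.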

This is the most painful truth companies don’t admit:

Engineers spend more time auditing AI workflows than improving products.

Real-world logs include:

One client’s engineering team spent 40–60 hours every month validating ingestion patterns even though the process was “fully automated.”

This is the definition of hidden OPEX.

CFOs miss these costs because automation dashboards are designed to show progress, not problems.

Let’s break down the visibility gap:

Automation vendors highlight:

But these figures hide the real cost:

The remaining 2–10% requires disproportionate human time.

Teams do not report “micro-corrections.”

They simply absorb them.

Over 6 months, this creates a shadow workload CFOs never see.

AI shifts work; it doesn’t eliminate it.

People simply move from performing tasks to fixing the AI’s interpretation of those tasks.

The most costly zone, the accuracy layer, doesn’t have an owner.

Cost leaks fall through the cracks.

Here are the four zones where AI breaks down most often:

Different systems - different formats

Different vendors - different templates

AI struggles with:

OCR and LLM-based extraction fail with:

These failures create huge manual queues.

AI frequently misclassifies:

Misclassification cascades into downstream errors.

AI doesn’t “decide.”

It gives a confidence score.

Below threshold - human review.

Above threshold but still inaccurate - errors pass through undetected.

Both create cost.
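Both costs can be measured if a sample of outputs has been audited by humans. This hypothetical helper, assuming a list of `(confidence, was_correct)` pairs, reports the share of items a given threshold sends to review and the share of errors it lets through silently; the audited data is invented for illustration.

```python
def threshold_report(samples, threshold):
    """samples: list of (confidence, was_correct) pairs from a human-audited sample."""
    n = len(samples)
    # Cost 1: items below the threshold that humans must review.
    to_review = sum(1 for conf, _ in samples if conf < threshold)
    # Cost 2: wrong outputs above the threshold that pass through undetected.
    silent_errors = sum(1 for conf, ok in samples if conf >= threshold and not ok)
    return {"review_share": to_review / n, "silent_error_share": silent_errors / n}


audited = [(0.99, True), (0.95, True), (0.91, False), (0.88, True), (0.72, True), (0.55, False)]
for t in (0.8, 0.9, 0.95):
    print(t, threshold_report(audited, t))
```

In this made-up sample, raising the threshold from 0.8 to 0.95 removes the silent error but doubles the review share from one third to two thirds, which is the trade-off described above.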

Hybrid Human-in-the-Loop (HITL) operations do NOT replace automation.

They stabilize it.

Here’s how:

Fix issues at ingestion - avoid cascading errors.

This cuts 40–60% of rework.

Structured exception categories reduce manual backlogs.

Instead of chaotic queues, HITL provides predictable workflows.
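A minimal sketch of structured exception categories, assuming each extracted record carries a value, an optional format-check flag, and a confidence score. The three categories and their order of precedence are hypothetical; a real taxonomy would come from the exceptions your own queues actually contain.

```python
from collections import defaultdict
from enum import Enum


class ExceptionCategory(Enum):
    MISSING_FIELD = "missing_field"
    FORMAT_MISMATCH = "format_mismatch"
    LOW_CONFIDENCE = "low_confidence"


def categorize(record, threshold=0.85):
    """Assign one category per exception so it lands in a dedicated queue."""
    if record.get("value") in (None, ""):
        return ExceptionCategory.MISSING_FIELD
    if not record.get("format_ok", True):
        return ExceptionCategory.FORMAT_MISMATCH
    if record.get("confidence", 0.0) < threshold:
        return ExceptionCategory.LOW_CONFIDENCE
    return None  # not an exception; passes straight through


def build_queues(records):
    """Group exception record IDs by category instead of one mixed queue."""
    queues = defaultdict(list)
    for rec in records:
        category = categorize(rec)
        if category is not None:
            queues[category].append(rec["id"])
    return queues


records = [
    {"id": "bol-1", "value": "", "confidence": 0.9},
    {"id": "bol-2", "value": "12/03/2024", "format_ok": False, "confidence": 0.93},
    {"id": "bol-3", "value": "ACME Freight", "confidence": 0.61},
    {"id": "bol-4", "value": "ACME Freight", "confidence": 0.97},
]
queues = build_queues(records)
for category, ids in queues.items():
    print(category.value, ids)
```

Separate queues let each category be staffed, timed, and reported on its own, which is what makes the backlog predictable.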

Data analysts + QA teams correct AI’s errors in:

Stable data - accurate reporting.

HITL teams build reusable rules:

This prevents AI from making the same mistake twice.
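The simplest form of a reusable rule is a lookup of past human corrections, keyed by source, field, and the raw value the model produced. This `CorrectionRules` class is a hypothetical sketch; production rule sets would typically also match patterns rather than exact values.

```python
class CorrectionRules:
    """Store human corrections keyed by (source, field, raw value) and replay them."""

    def __init__(self):
        self._rules = {}

    def learn(self, source, field, raw_value, corrected_value):
        # Record a reviewer's fix so the same misread is corrected automatically next time.
        self._rules[(source, field, raw_value)] = corrected_value

    def apply(self, source, field, raw_value):
        # Return the stored correction if this exact case was fixed before; otherwise pass through.
        return self._rules.get((source, field, raw_value), raw_value)


rules = CorrectionRules()
# A reviewer fixes a recurring OCR misread from one vendor's template.
rules.learn("vendor-a", "currency", "U5D", "USD")
print(rules.apply("vendor-a", "currency", "U5D"))
print(rules.apply("vendor-b", "currency", "U5D"))
```

Keying on the source keeps a fix for one vendor's template from silently changing another vendor's data.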

Here’s a formula that finally reveals the cost:

Operational Debt = Error Rate x Volume x Cost Per Error

Example:

Operational Debt = $84,000/month

That is just over $1M per year ($84,000 × 12 = $1,008,000) in hidden leakage.
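The formula translates directly into code. The inputs below (6% error rate, 200,000 items per month, $7.00 per error) are hypothetical, picked only because they produce an $84,000 monthly figure; they are not necessarily the inputs behind the example above.

```python
def operational_debt(error_rate, volume, cost_per_error):
    """Operational Debt = Error Rate x Volume x Cost Per Error (for one period)."""
    return error_rate * volume * cost_per_error


# Hypothetical inputs chosen only to illustrate the arithmetic.
monthly = operational_debt(error_rate=0.06, volume=200_000, cost_per_error=7.00)
annual = monthly * 12
print(f"${monthly:,.0f}/month, ${annual:,.0f}/year")
```

Cost per error is the hardest input to pin down; a reasonable starting point is average handling time multiplied by the loaded hourly cost of the people doing the corrections.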

This is the number CFOs never see, but need to.

AI is powerful but fragile.

Without human validation layers, automation produces:

Enterprises don’t need “more AI.”

They need governed AI, reinforced by Hybrid Human-in-the-Loop operations.

If your AI-driven workflows are creating unseen cost leakage, the fastest way to uncover it is to run a structured audit.

Request a Data Accuracy & Operational Debt Audit with TRANSFORM Solutions.

Trust TRANSFORM Solutions to be your partner in operational transformation.

Book a free consultation.