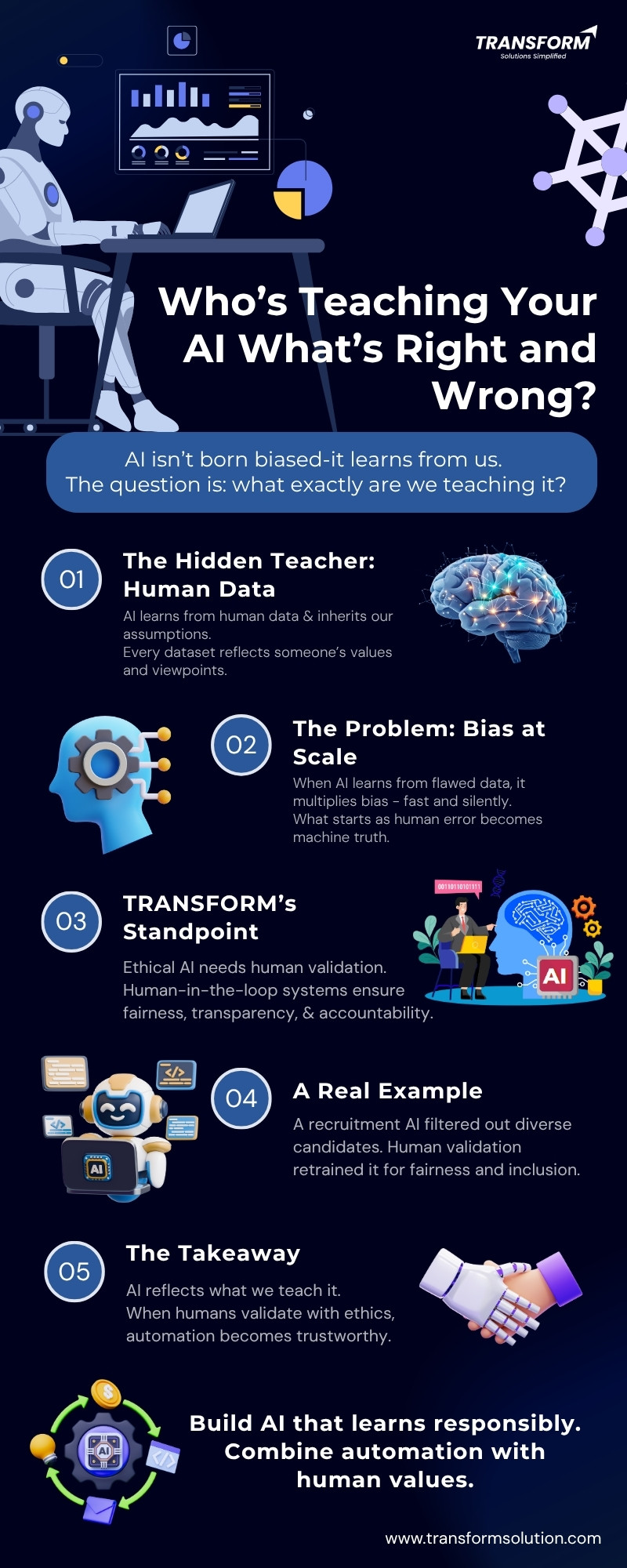

“AI isn’t born biased; it learns from us. The question is: what exactly are we teaching it?”

One of the most disruptive forces across industries, artificial intelligence is transforming how individuals use technology and how organizations work. AI is rapidly becoming a key driver of decision-making, powering everything from automated hiring systems that determine which candidates move forward to customer service chatbots that handle thousands of inquiries each day.

Virtual assistants simplify everyday tasks, predictive analytics guide strategic business planning, and advanced medical diagnostic technologies help doctors diagnose disease earlier and more accurately. However, a basic concern underlies every AI answer, recommendation, and automated action: can we trust the lessons the AI is learning, and who is teaching it what is good, fair, or acceptable?

This is where AI governance comes in, acting as a framework to ensure the systems we build demonstrate accountability, equity, and transparency rather than unconscious biases, outdated beliefs, or incorrect practices embedded in the underlying training data. Governance determines how AI should be taught, how its decisions should be monitored, and how ethical standards should be observed at every step of its development. Even though AI learns very quickly, it doesn't learn correctly unless humans deliberately validate that learning. Without adequate monitoring, even the most sophisticated technologies may amplify biases or automate harmful decisions at scale.

In this blog, we explain why responsible AI is more important than ever, how human-in-the-loop AI provides crucial oversight to catch mistakes before they become more serious, and why businesses should never rely solely on automation without robust ethical safeguards. As AI continues to shape recruiting, healthcare, finance, marketing, and daily communication, adopting careful governance is not only a recommended practice but also a prerequisite for developing reliable, human-centered technology.

AI systems are neither inherently fair nor impartial. They absorb the world as it is, not as it should be. Most AI systems are trained on massive datasets containing millions of words, photos, past decisions, and user interactions. These datasets frequently contain:

● Biases based on culture

● Inequitable group representation

● Language-based stereotypes

● Past biased decisions

● Incomplete or erroneous information

This means AI may amplify human bias:

● A hiring algorithm may overlook qualified candidates.

● Particular dialects could be misinterpreted by a customer service AI.

● A predictive model may unfairly single out specific neighborhoods.

● For underrepresented groups, a medical diagnostics model might perform poorly.

Thus, when people ask, "Why does AI exhibit bias?" the answer is clear: AI mirrors the human data and decisions it learns from.

For this reason, AI governance, standards, and ethical frameworks are required before any model is deployed at scale. Without them, automation propagates injustice faster than manual processes ever could.

Many companies wrongly assume that AI can think for itself or make objective, rational decisions. However, AI lacks ethical insight. It has no inherent sense of fairness, and it is not as adept at understanding context as people are.

AI bias emerges as a result of:

● Inconsistent or skewed datasets

● Insufficient context during training

● Human decisions imprinted with historical bias

● Algorithms that prioritize accuracy over morality

● Lack of a variety of viewpoints during development

The scary part?

● AI bias scales automatically.

● A biased human choice impacts one person at a time.

● A biased AI decision could immediately impact thousands of people.

For this reason, any digital transformation strategy must include ethical AI and AI transparency.

The framework known as AI governance establishes:

● How to train AI

● How to validate AI responses

● How to handle ethical risks

● How to monitor for bias

● How people should be involved in the system

Despite its power, AI still requires guidance. This is where a human-in-the-loop (HITL) system helps. In HITL AI, people continuously:

● Examine AI choices

● Correct output

● Eliminate bias

● Provide context

● Evaluate the performance in various situations.

This ensures that AI automation does not compromise fairness or accuracy.
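One common way to put a human in the loop is to let the model act on its own only when it is confident, and to send everything else to a reviewer. The sketch below illustrates that idea. The threshold value, the `Prediction` class, and the function names are all hypothetical choices made for illustration, and a high-stakes system may choose to route every decision to a person instead.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold: predictions below this confidence go to a person.
REVIEW_THRESHOLD = 0.85


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # the model's own confidence score, between 0 and 1


def route(prediction: Prediction, threshold: float = REVIEW_THRESHOLD) -> str:
    """Return 'auto' if the output may be applied directly,
    or 'human_review' if a person should check it first."""
    if prediction.confidence >= threshold:
        return "auto"
    return "human_review"


def apply_review(prediction: Prediction, reviewer_label: Optional[str]) -> Prediction:
    """Let a human reviewer override the model's label.

    Passing None means the reviewer agreed with the model.
    """
    if reviewer_label is None:
        return prediction
    # In this sketch a human correction is treated as fully trusted.
    return Prediction(prediction.item_id, reviewer_label, 1.0)


# Illustrative inputs only.
predictions = [
    Prediction("ticket-1", "refund", 0.97),
    Prediction("ticket-2", "complaint", 0.61),
]
queues = {p.item_id: route(p) for p in predictions}
corrected = apply_review(predictions[1], "billing")
```

Here `ticket-2` falls below the threshold, so it lands in the review queue, and the reviewer's correction replaces the model's label.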

Organizations aiming for efficiency often lean heavily toward full automation. In reality, automation and AI function best when people remain involved. Human supervision guarantees that:

● AI doesn't make bad choices for consumers.

● AI replies align with brand values.

● Standards of ethics are respected.

● Real-world context is applied.

● Errors are fixed before they become more serious.

HITL keeps your AI from becoming a liability or going rogue.

Let's discuss a case where a promising AI system became an unfair gatekeeper due to insufficient governance.

To automate resume screening, a business deployed an AI-based recruitment platform. The goals were to reduce hiring time, improve efficiency, and weed out unsuitable candidates.

The AI was trained on historical hiring data that contained biased patterns:

● Priority for applicants from specific colleges

● Underrepresentation of female candidates in technology roles

● Subjective selection decisions made by former hiring managers

● Linguistic and cultural characteristics unrelated to work performance

Within a few weeks, HR observed a problematic pattern. The AI was discarding applications that didn't fit a specific profile and rejecting female applicants at a disproportionate rate. The system was repeating the biases of earlier hiring decisions.

A group of specialists intervened to validate the AI using responsible AI techniques.

Auditing for Bias - They discovered that several keywords were unfairly associated with poor ratings after analyzing rejection trends.

Correcting Datasets - To ensure fair representation, the training data was cleaned, diversified, and rebalanced.

Additional Ethical Guidelines - Human evaluators added explicit standards about gender neutrality, skill evaluation, and job-relevant indicators.

Transparency in Contextual AI - The system was modified to display the reasons for resume rejections.

Constant Observation - A human review loop was added before final decisions were made.
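A bias audit like the one described above often starts by comparing how frequently each group passes the screen. The sketch below computes per-group selection rates and flags any group whose rate falls below 80% of the best-performing group's rate, a widely used rule of thumb sometimes called the four-fifths rule. The function names, group labels, and numbers are hypothetical and are not taken from the case described here.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Compute the share of accepted applicants per group.

    `decisions` is an iterable of (group, accepted) pairs, where
    `accepted` is True if the screening model advanced the applicant.
    """
    totals = defaultdict(int)
    accepted = defaultdict(int)
    for group, was_accepted in decisions:
        totals[group] += 1
        if was_accepted:
            accepted[group] += 1
    return {group: accepted[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


# Made-up illustrative data: 100 applicants per group.
decisions = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 20 + [("group_b", False)] * 80
)

rates = selection_rates(decisions)
ratios = impact_ratios(rates)

# Flag groups selected at less than 80% of the top group's rate.
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]
```

With this sample data, `group_b` is selected at half the rate of `group_a`, so it is flagged for closer review. A flag is a prompt for human investigation, not proof of discrimination on its own.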

The results were clear:

● The AI rated candidates on the relevance of their skills instead of stylistic or demographic criteria.

● The quality of hiring improved.

● Diversity grew.

● Fairness became measurable and accountable.

This change was possible only because humans intervened as ethical guardrails.

Organizations now use AI in practically every aspect of their operations. It powers customer service, allowing support teams to respond faster and more accurately. It improves productivity by automating repetitive processes and streamlining internal workflows.

AI strengthens marketing by assessing consumer behavior and forecasting campaign results. In HR operations it filters applicants and improves personnel management, and in financial analysis it finds trends that humans would overlook. AI even powers sales automation, providing organizations with data-driven insights that help them close deals.

But putting AI into practice without ethical foundations is like building a tower without structural safety checks. It may look impressive from the outside, but it is dangerously unstable at its core. This is why responsible AI is required.

Responsible AI guarantees:

Fairness - No discrimination or unfair results

Accountability - Unambiguous ownership of each AI-driven choice

Transparency - Users comprehend how and why AI acts in specific ways.

Safety - Systems prevent unsafe or unexpected consequences.

Compliance - Adherence to changing regulations, laws, and industry standards.

Responsible AI is neither a catchphrase nor a trend. It is the cornerstone of long-term trust in automation and of ensuring that AI serves everyone safely and equitably.

As clients and employees become more aware of how much AI affects their day-to-day lives, they want to know how these systems make decisions. People feel more secure when the logic behind AI is transparent and comprehensible, whether it's an AI answer generator, a chatbot that interacts with clients, or a sophisticated predictive model used for hiring or financial review. Transparent AI builds trust by letting users see that the system is sound and reliable. Opaque AI, on the other hand, raises suspicions about hidden biases, unfair treatment, or unexplained outcomes.

For this reason, transparency in AI is essential. Users should be able to trace:

● Which information did the AI learn from?

● How was a specific choice made?

● What rules or logic govern its responses?

This traceability is crucial for building trust, particularly when AI influences essential decisions such as loan approvals, job applications, medical insights, and customer service outcomes. People feel more empowered, respected, and willing to rely on AI-driven systems when they understand the logic behind automated behavior.
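The three tracing questions above map naturally onto a record stored alongside every automated decision. The sketch below shows one possible shape for such a record. Every field name and sample value is hypothetical, and a real system would adapt the structure to its own models and regulatory requirements.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    decision_id: str
    model_version: str      # which model produced the decision
    training_data_ref: str  # which dataset the model learned from
    inputs: dict            # the features the model actually saw
    outcome: str            # what the model decided
    reasons: list           # human-readable factors behind the outcome
    reviewed_by: str = ""   # stays empty until a person signs off
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        """Serialize the record as one JSON line for an audit log."""
        return json.dumps(asdict(self), sort_keys=True)


# Hypothetical example values.
record = DecisionRecord(
    decision_id="loan-0001",
    model_version="credit-model-v3",
    training_data_ref="applications-2023-q4",
    inputs={"income": 52000, "requested_amount": 12000},
    outcome="refer_to_underwriter",
    reasons=["requested amount is high relative to income"],
)
line = record.to_json()
```

Keeping the reasons in plain language means the same record can serve an internal audit and an explanation shown to the affected person.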

Whether they are enterprise-level systems or free applications, AI response generators can produce useful results quickly. They can:

● Draft emails

● Provide customer support

● Generate AI text responses

● Handle leads

● Personalize content

● Automate repetitive communication

However, in the absence of governance, a paid system or even a free AI response generator may produce:

● Biased answers

● False information

● Inappropriate or sensitive content

● Choices that go against rules or moral principles

Because of this, AI governance needs to be incorporated into all automation stages, particularly in systems that interact with customers.
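For customer-facing generators, one simple governance layer is a check that runs on every draft reply before it is sent. The sketch below is a deliberately minimal illustration that assumes a hypothetical list of blocked phrases and a basic email pattern. Real deployments typically combine checks like this with classifiers and human review.

```python
import re

# Simple pattern for spotting email addresses in outgoing text.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

# Hypothetical phrases this business never wants an AI reply to contain.
BLOCKED_PHRASES = ("guaranteed approval", "medical diagnosis")


def check_response(text):
    """Return a list of policy issues found in a draft AI reply.

    An empty list means the draft passed these basic checks.
    """
    issues = []
    if EMAIL_PATTERN.search(text):
        issues.append("contains an email address")
    lowered = text.lower()
    for phrase in BLOCKED_PHRASES:
        if phrase in lowered:
            issues.append(f"contains blocked phrase: {phrase}")
    return issues


clean = check_response("Thanks for reaching out! We will reply soon.")
risky = check_response("We offer Guaranteed Approval, write to jane@example.com")
```

A draft that returns any issues can be held back and passed to a person instead of being sent automatically.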

The foundation of intelligent, trustworthy AI is ethics. A strong framework for AI ethics consists of:

● Privacy protection

● Non-discrimination

● Fair decision-making

● Accountability

● Transparency

● Security

Ethical AI ensures that automation doesn’t compromise fairness or human dignity. Organizations that claim to use AI responsibly are committing to AI ethics and governance, the rules and standards that support safe automation.

AI is advancing so rapidly that many companies struggle to keep up with the latest methods. This is the point at which professional help is required. In addition to deploying tools, a forward-thinking AI automation company ensures that:

● Governance frameworks are established.

● Bias is tracked and addressed.

● Human-in-the-loop systems are active.

● Ethical compliance is maintained.

● AI continues to align with the brand's values and voice.

● Models evolve responsibly over time.

Because automation without values is negligence, not innovation.

Ethical oversight is essential in a world where AI is used in recruiting, healthcare, finance, communication, and day-to-day processes. That's where having the right partner matters for your business. At TRANSFORM Solutions, we create AI that respects people, supports fairness, and produces reliable outcomes. From responsible automation to governance frameworks, from AI validation to transparency-driven AI deployment, we ensure the AI in your company reflects intelligence and integrity rather than harmful assumptions or hidden bias.

Trust TRANSFORM Solutions to be your partner in operational transformation.

Book a free consultation